As an Oracle specialist you’ve probably heard of SQL Trace and it’s brother tkprof. SQL Trace generates a low level trace file that has a complete chronological record of everything a session is doing and waiting for when it “talks” to the database. TKPROF on the other hand takes that trace file and aggregates all of the low level details in an easy to read report. This report can then be quickly analyzed to find the root cause of the slow performance.

There are a lot of samples online showing the use of TKPROF in a lab environment. But I haven’t seen one that actually shows you a real use-case similar to what you’ll face on your job. So instead of giving you all possible options of turning on SQL Trace and all possible flags TKPROF utility accepts I’ll show you exactly how and when I use it with a real example. Sounds good?

When Not To Use TKPROF

First, lets cover when TKPROF is not a good option. Do not use TKPROF to analyze short lived sessions that are typical on a very busy website. You are much better off looking at ASH Monitoring. It’s because only ASH can give you an aggregated view across all sessions and what they are waiting for. It can also pin point the SQL_ID across all of these sessions that is generating the most I/O waits. So use ASH when you are troubleshooting a performance problem that is across 99% of all sessions in the database. An example of this would be when you get a call from your dev-ops team saying that their response time from the database has gone up substantially in the last 15 minutes.

What TKPROF is Good For

My #1 use-case for TKPROF is when I am tracing a bad performing batch job. Batch jobs work on a large set of data either looping over each record or performing a set operation on all of them. And for this reason a batch job is usually long running and a developer will typically have an expectation when it should complete. When a job doesn’t complete on time – you will either get a call to look into the problem or your monitoring system will alert you that there is a long running process consuming large resources on the database server.

In either case you are now tasked with finding the root cause of a problem. And sometimes your monitoring system will give you enough details to pin point the problem (usually a single poorly written SQL). But there are cases when you need to dig deep. There could be multiple poorly performing SQL statements that are switching back and forth making it difficult to tell which one is the culprit. And in these instances SQL Trace and TKPROF really come in handy, so lets go over the whole process now.

Identify SID/SERIAL

First we need to identify the SID/SERIAL# of the job so we can enable SQL Trace on it. There are couple of ways you can do this:

OPTION-A: If your monitoring system picked up the poorly performing session SID/SERIAL# – then move on to the next step (Enable SQL Trace), otherwise choose one of the two options below.

OPTION-B: I always like to get on the actual database node and check how much damage this sessions is really doing. Most of the times the poorly performing batch job will be burning up CPU and I/O. This makes it very easy to find it using the following shell command which lists the top 10 process ids (PIDs) currently active on the server:

ps -eo pcpu,pid,user,args | sort -k 1 -r -n | head -10

the above snippet works on Linux. Here’s an equivalent for SUN Solaris:

/usr/ucb/ps -aux | head

I usually save this snippet in a file called topas and put it in my user’s personal bin directory so I can execute it without the need to remember the long syntax. BTW, the reason I call it topas is because there is a great utility on IBM AIX that does exactly this function and it’s called topas.

TIP: If you are on Oracle RAC, you will need to first identify which node the job is running on. I prefer to constrain batch jobs to run on a specific node using dedicated connections. This makes it much easier to find them later (I create a custom DB SERVICE and ask developers to use it when making connection to the database).

Here’s a sample output of the above snippet that clearly shows that the PID (4089) is the culprit we need to trace (it’s consuming the most amount of CPU on the server):

Once we have the PID we can find SID using the following sql:

clear col

set head on

set pages 60

set lines 300

set trims on

set tab off

col sid format 9999

col serial# format 999999

col username format a15

col machine format a15 trunc

col osuser format a12

col maction format a45

col status format a12

col spid format a10

col process format a10

col event format a30 trunc

col SECONDS_IN_WAIT format 9999 heading SECW

select s.sid,s.serial#,s.username,

s.status,s.osuser,s.machine,s.process,s.event,

s.SECONDS_IN_WAIT,

p.spid, s.module||' '||s.action maction

from v$session s

, v$process p

where (s.process='&&1' OR

s.paddr = (select addr

from v$process

where spid='&&1'))

and s.paddr = p.addr;

exit;

save the above script in /tmp/pid.sql and call it from sqlplus as follows:

PID=<pid you got from topas>

sqlplus -s "/ as sysdba" @/tmp/pid.sql $PID

It should produce a report giving you the SID/SERIAL# for the batch job in question.

OPTION-C: What if you don’t know which database node the job is running on? Or, what if your DB server is so utilized by other processes that topas doesn’t put the runaway job at the top of it’s list? In this case, I ask the developers to give me the name of the machine the job was submitted from and optionally the CLIENT_IDENTIFIER they use for the process. I then use the following shell script to find the SID/SERIAL for this job:

#!/bin/ksh

# $Id: msid.sh 30 2016-01-07 23:36:07Z mve $

# Copyright 2016 HASHJOIN (http://www.hashjoin.com/). All Rights Reserved.

NODE=$1

CID=$2

if [ ${NODE}"x" == "x" ]; then

print -n "Enter machine name: "

read NODE

print

fi

if [ ${CID}"x" == "x" ]; then

CLIENT_ID=""

else

CLIENT_ID="or s.CLIENT_IDENTIFIER like '${CID}'"

fi

sqlplus -s /nolog <<EOF

connect / as sysdba

set head on

set pages 60

set lines 300

set trims on

set tab off

col inst_id format 99 heading I#

col sid format 9999

col serial# format 999999

col username format a15

col machine format a16 trunc

col osuser format a12

col maction format a20 trunc

col spid format a10

col process format a10

col LOGON_TIME format a13

col event format a30 trunc

col seconds_in_Wait heading sw format 99999

select s.inst_id, s.sid,s.serial#,s.username,

s.status,s.osuser,

to_char(s.LOGON_TIME,'mon-dd hh24:mi') LOGON_TIME,

s.machine,s.process,

p.spid, s.module||' '||s.action maction,

event, seconds_in_Wait

from gv\$session s

, gv\$process p

where s.machine like '${NODE}' ${CLIENT_ID}

and s.paddr = p.addr

and s.inst_id = p.inst_id;

exit;

exit

EOF

save the above code in /tmp/msid.sh and then execute it as follows:

chmod +x /tmp/msid.sh

/tmp/msid.sh MACHINE CLIENT_ID

where MACHINE is a partial or full name of the machine the job was submitted from and CLIENT_ID is the value of v$session.CLIENT_IDENTIFIER that was set on the session by the developer using dbms_session.set_identifier procedure. NOTE that CLIENT_ID is completely optional – you can just use MACHINE parameter and completely omit the CLIENT_ID.

For example, lets say the hostname is dwhost01.dc.mydomain.com and CLIENT_IDENTIFIER is dw_calculate_revenue_daily_job, you can use the following call:

/tmp/msid.sh dwhost%.dc1% %calculate_revenue%

Alright! So at this point we have the SID/SERIAL#, we can finally turn the SQL Trace ON for this session. Now, let me pause here for a second and bring your attention to how much ground we already covered! And we haven’t even touched on the subject of this article – TKPROF. This just shows how much additional know-how you need before you can even touch this tool. Ok, lets continue to the next step of enabling SQL Trace.

Enable SQL Trace

In the previous section we figured out the SID/SERIAL# for the slow running batch job. And now we can finally enable SQL Trace for this session. After the SQL Trace is turned on – Oracle will write out all the waits and calls this session is doing to a trace file on the database server. We’ll then use TKPROF to analyze this trace file and generate a human readable report to review.

There are way too many options of enabling SQL Trace and for this reason I’ll only show you one way to do this – using set_ev procedure of the dbms_system package – here’s the syntax:

exec sys.dbms_system.set_ev(SID,SERIAL#,10046,12,'');

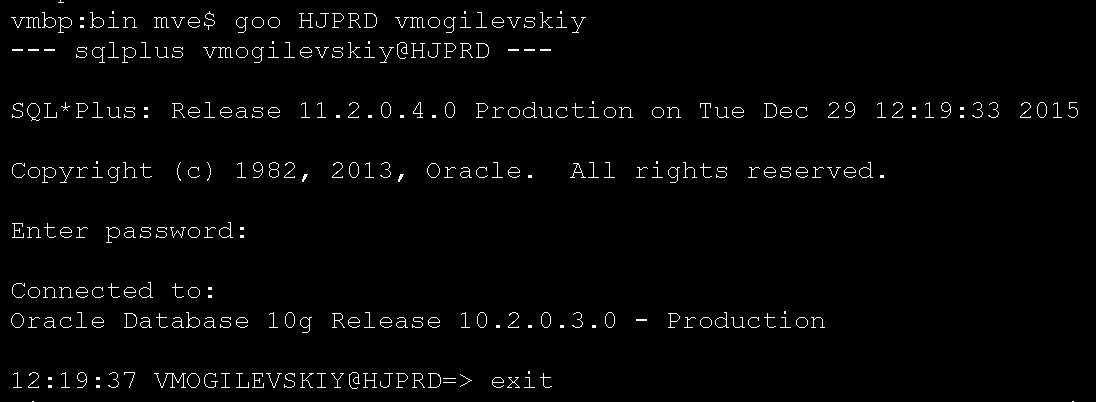

For example lets say that the SID and SERIAL# we gathered are 3927 and 22 respectively and that my DBA account on this server is vmogilevskiy. Here’s the process then:

- login to the database node where we found this session via

sqlplus(I like to be on the db node directly because the next step is to look at the trace file). - grant vmogilevskiy execute on

dbms_system. - connect as vmogilevskiy and call

set_evprocedure giving it SID/SERIAL# as parameters.

Here’s the code:

sqlplus /nolog

connect / as sysdba

grant execute on dbms_system to vmogilevskiy;

connect vmogilevskiy;

exec sys.dbms_system.set_ev(3927,22,10046,12,'');

NOTES: Grant execute only needs to be done once, but I still wanted to list it here in case your DBA account doesn’t yet have execute privilege on dbms_system. Trace level (12) will show all bind variable in the raw trace file including recursive sql ran by SYS – that’s my preferred trace level.

Now we are finally ready to use TKPROF!

TKPROF the Trace File

In the previous step we turned on the SQL Trace and Oracle is already writing out all the waits and calls this session is doing to a trace file on the database server. Our next step is to find the trace file and run tkprof on it. This trace file will be located in the udump directory directly on the database server and part of it’s name will contain thegv$process.SPID value.

It’s also almost always the case that the trace file we are after is the last one modified in the udump directory. So running a simple ls -lta | head to list the last 10 modified files will most likely put it at the top of the list.

For example, in my sample the SPID=32262 and the trace file name is repdb_ora_32262.trc:

oracle@dwdb01~ cd /oracle/orabin/admin/repdb/udump/

oracle@dwdb01~ ls -lta | head

total 930224

-rw-r----- 1 oracle oinstall 12844373 Dec 3 15:43 repdb_ora_32262.trc

drwxr-xr-x 2 oracle oinstall 118784 Dec 3 15:41 .

-rw-r----- 1 oracle oinstall 275441 Dec 3 00:30 repdb_ora_8320.trc

-rw-r----- 1 oracle oinstall 1296 Dec 3 00:22 repdb_ora_8259.trc

-rw-r----- 1 oracle oinstall 136386 Dec 2 00:37 repdb_ora_8365.trc

-rw-r----- 1 oracle oinstall 272785 Dec 2 00:30 repdb_ora_8313.trc

-rw-r----- 1 oracle oinstall 137822 Dec 1 00:29 repdb_ora_8291.trc

-rw-r----- 1 oracle oinstall 1258 Dec 1 00:23 repdb_ora_8271.trc

-rw-r----- 1 oracle oinstall 1296 Dec 1 00:23 repdb_ora_8238.trc

And to make sure it’s the right file we can quickly take a peek at what’s being written to it using tail -f command:

oracle@dwdb01~ tail -f repdb_ora_32262.trc

Lets CTL-C out of the tail -f and run the tkprof to generate the human readable report which should give us what we are after (the top consuming SQL statements for this session). And believe it or not – to answer this question using TKPROF all you need is two parameters: trace file and output file:

tkprof repdb_ora_32262.trc repdb_ora_32262.out

Really, that’s all there is to it! Because now that we have the output file generated by tkptof (repdb_ora_32262.out) we can use a simple grep command to list the total elapsed time for each of the SQL statements. The value we are after is listed in the 4th column – see below (note: I shortened the number of columns so they fit on this screen):

oracle@dwdb01~ grep "total" repdb_ora_32262.out

Binary file repdb_ora_32262.out matches

oracle@dagdb01~ strings repdb_ora_32262.out | grep "total"

total 8002 1.63 185.22 15297 34195

total 4002 0.65 63.31 5940 12334

total 2001 1.86 1.83 0 386

total 8002 0.61 31.20 4004 12705

total 12000 0.20 0.18 0 0

total 800 0.16 0.16 0 801

total 2000 0.73 0.71 0 281

total 2000 0.66 0.67 0 257

total 2000 0.68 0.66 0 228

total 2000 0.80 0.81 0 249

total 4000 0.31 0.31 0 8051

total 4000 0.05 0.05 0 0

total 40 0.02 0.02 0 0

total 80 1.29 1.26 0 54040

total 87 0.00 0.00 0 122

total 18 0.00 0.00 0 54

total 0 0.00 0.00 0 0

total 51032 9.74 286.44 25241 123703

oracle@dwdb01~

And in this case the highest elapsed time is 185.22 (note: the last row with 286.44 is just a total for all sql). Armed with this information we can open the trace file using vi repdb_ora_32262.out and then search for 185.22 (/185.22) which will bring us to the following block of trace file:

Now that we have the top SQL for this job – we can either send our findings to the developer team or take it one step further and get an EXPLAIN plan for it to see if it’s missing an index. To get an explain plan using TKPROF simply append one more parameter to it’s call explain=username/password (NOTE: the username should have access to the objects in the sql you are after):

tkprof repdb_ora_32262.trc repdb_ora_32262_explained.out explain=user/password

this will produce an EXPLAIN PLAN next to the SQL we are after directly in the output file.

Finally, there is one last thing left to do – turn off the SQL Trace for the session – otherwise you are running a risk of consuming all space on the database server it if continues to run. To turn off SQL Trace I use the following procedure:

exec sys.DBMS_SYSTEM.SET_SQL_TRACE_IN_SESSION(sid,serial#,false);

and in this example the call would be as follows:

exec sys.DBMS_SYSTEM.SET_SQL_TRACE_IN_SESSION(3927,22,false);

And that’s all there is to it! Happy tkprof’ing! If you found this article helpful and would like to receive more like it as soon as I release them make sure to sign up to my newsletter below:

SUBSCRIBEVitaliy Mogilevskiy January 7, 2016

Posted In: Operations, Scripts